First Mile Observability and the Rise of Observability Pipelines

When people speak of observability (assuming they talk about it at all), they tend to focus on the "backend" - the systems responsible for ingesting, storing, and querying observability data (metrics, logs, and traces). But this is only half the story.

For observability data to make it into these systems, it first needs to be collected, processed, and exported from the infrastructure and services that generate it to where it will be used. This "front end" of observability is known as "first-mile observability". Solutions in this space have grown in scope in recent years due to the growing volume (and cost) of modern observability data.

In this post, we'll go over what first-mile observability is and the landscape of offerings that exist in this space today.

A Series of Pipes

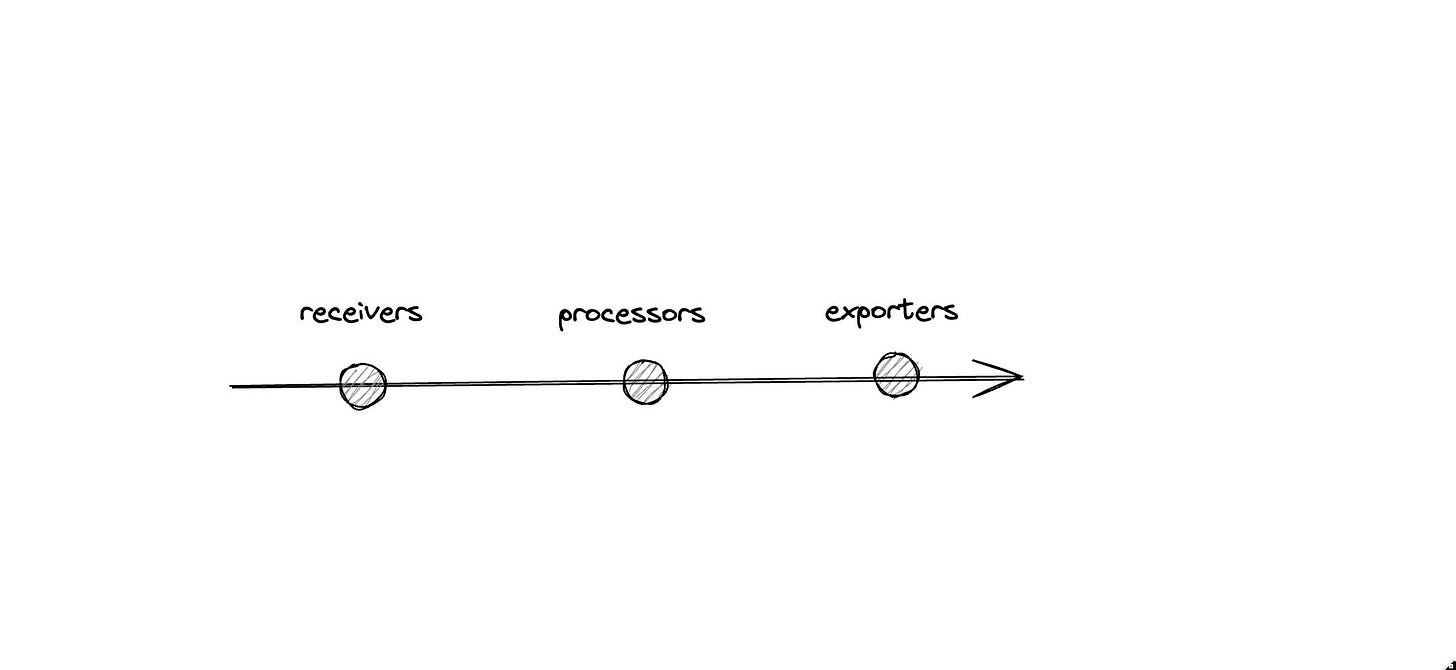

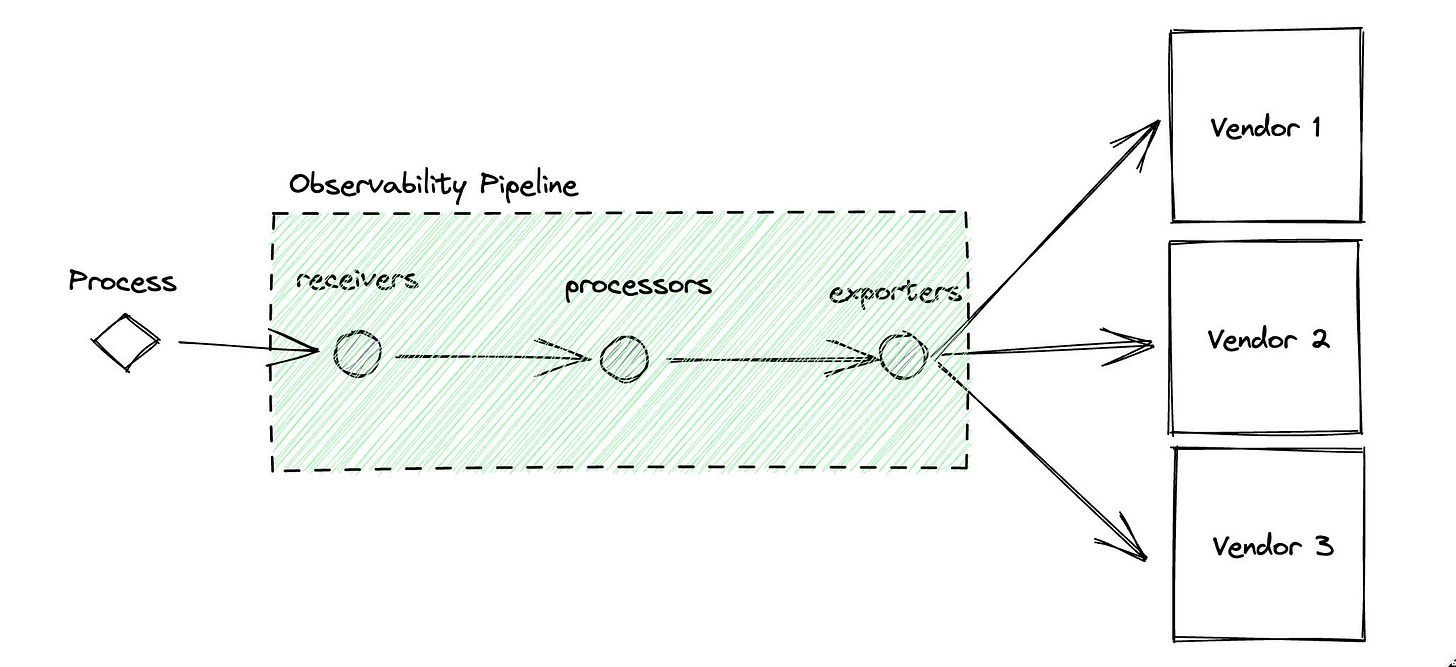

At its core, first-mile observability refers to the systems that facilitate the collection, processing, and exporting of observability data. They're centered around the following components:

receivers: push/pull data from different sources

processors: transform/filter/enrich/derive data in flight

exporters: send data to downstream destinations

NOTE: Different implementations have different names for these components. I'm using the terminology adopted by the OpenTelemetry (OTEL) collector.

One or more receivers, zero or more processors, and one or more exporters combine to create an observability pipeline.
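To make the three roles concrete, here is a minimal Python sketch of a pipeline. It is only an illustration under simplifying assumptions: records are plain dicts, and the `Pipeline` class and the `static_receiver`, `drop_debug`, and `add_env` helpers are hypothetical names invented for this example, not the API of any real collector.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Optional

# Assumption: one log line, metric point, or span is modeled as a plain dict.
Record = dict

Receiver = Callable[[], Iterable[Record]]         # yields records from a source
Processor = Callable[[Record], Optional[Record]]  # returns None to drop a record
Exporter = Callable[[Record], None]               # sends a record downstream


@dataclass
class Pipeline:
    receivers: List[Receiver]
    exporters: List[Exporter]
    processors: List[Processor] = field(default_factory=list)  # zero or more

    def _process(self, record: Record) -> Optional[Record]:
        # Apply processors in order; stop early if one of them drops the record.
        current: Optional[Record] = record
        for process in self.processors:
            current = process(current)
            if current is None:
                return None
        return current

    def run(self) -> None:
        for receive in self.receivers:
            for record in receive():
                processed = self._process(record)
                if processed is None:
                    continue
                for export in self.exporters:
                    export(processed)


def static_receiver() -> Iterable[Record]:
    # Hypothetical source: stands in for tailing a file or scraping an endpoint.
    yield {"level": "INFO", "msg": "started"}
    yield {"level": "DEBUG", "msg": "tick"}


def drop_debug(record: Record) -> Optional[Record]:
    # Filter: discard DEBUG records.
    return None if record["level"] == "DEBUG" else record


def add_env(record: Record) -> Record:
    # Enrich: attach a static attribute.
    return {**record, "env": "prod"}


sink: List[Record] = []  # an in-memory list stands in for a real destination
Pipeline(
    receivers=[static_receiver],
    processors=[drop_debug, add_env],
    exporters=[sink.append],
).run()
print(sink)
```

Real implementations add batching, retries, and backpressure on top of this shape, but the receiver, processor, exporter flow is the same.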

Some examples of common pipelines:

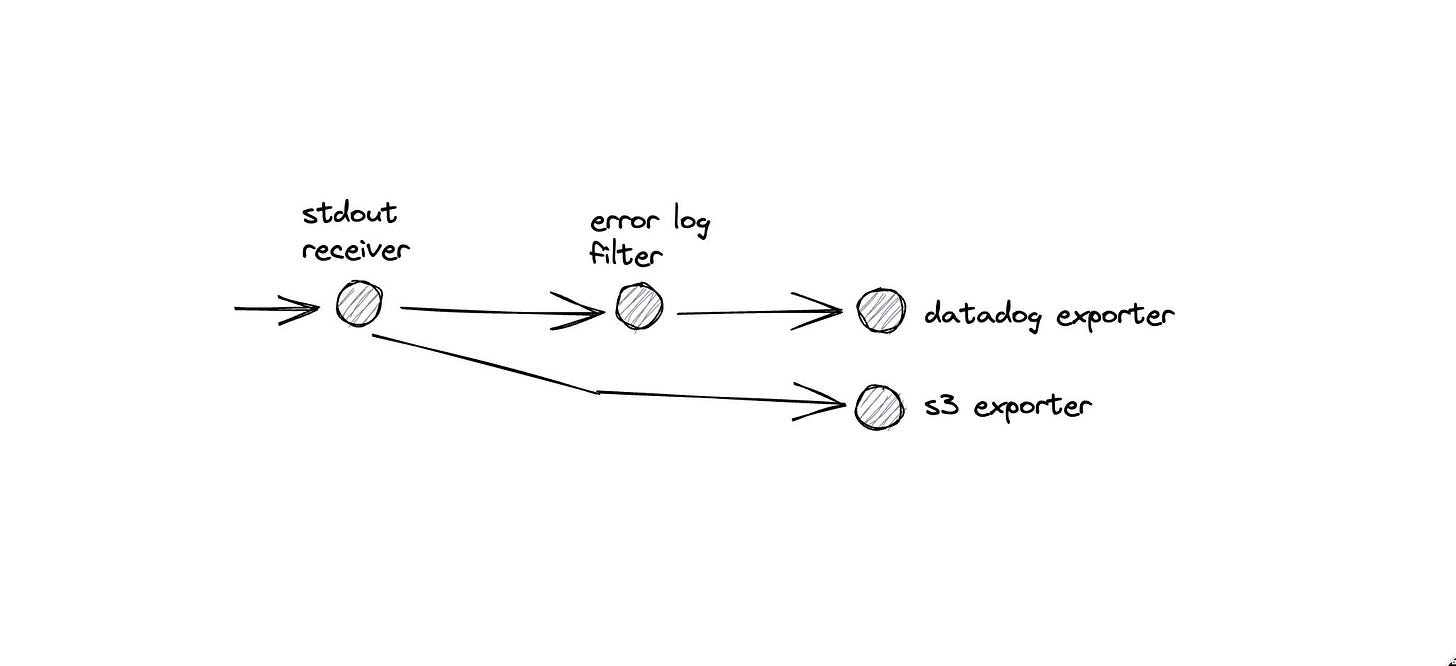

a pipeline that collects all log data and sends 100% of it for archival to S3 but only ERROR logs to Datadog

a pipeline that adds host and container level information as custom attributes to each data point before sending it upstream

a pipeline that removes unused attributes and dimensions from observability data

Example pipeline that filters logs sent to Datadog while sending everything to S3
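The three example pipelines above can be sketched together in a few lines of Python. This is a rough illustration, not how any particular tool implements it: the function names are hypothetical, the `host.name` attribute key is an assumption, and plain lists stand in for the S3 archive and the Datadog destination so that no real vendor API is involved.

```python
import socket


def enrich_with_host(record, hostname=None):
    # Add host information as a custom attribute (the key name is an assumption).
    host = hostname or socket.gethostname()
    attributes = {**record.get("attributes", {}), "host.name": host}
    return {**record, "attributes": attributes}


def drop_attributes(record, unused):
    # Remove attributes that nobody queries downstream.
    attributes = {
        key: value
        for key, value in record.get("attributes", {}).items()
        if key not in unused
    }
    return {**record, "attributes": attributes}


def route(records, archive, alerts, hostname=None, unused=frozenset()):
    # `archive` stands in for S3 and `alerts` for Datadog; both are plain lists.
    for record in records:
        record = drop_attributes(enrich_with_host(record, hostname), unused)
        archive.append(record)  # 100% of logs are archived
        if record.get("level") == "ERROR":
            alerts.append(record)  # only ERROR logs reach the costlier backend


logs = [
    {"level": "INFO", "msg": "ok", "attributes": {"debug_id": "x"}},
    {"level": "ERROR", "msg": "boom", "attributes": {}},
]
s3_archive, datadog = [], []
route(logs, s3_archive, datadog, hostname="web-1", unused={"debug_id"})
print(len(s3_archive), len(datadog))
```

The point of the split is cost: cheap storage receives everything, while the backend that bills by volume only sees the records worth alerting on.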

First Mile Concepts

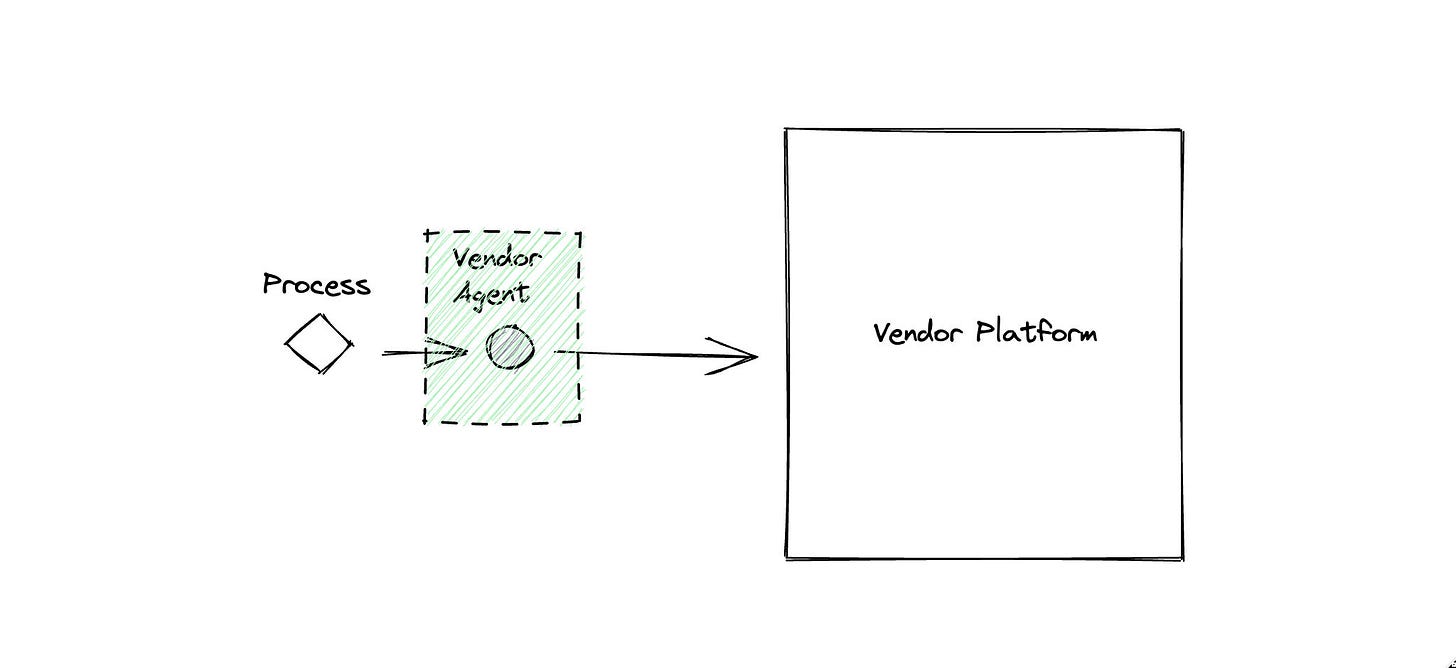

Agents

The most common deployment in first-mile observability is that of an agent. Agents tend to run on the same system that they are collecting data from. They are responsible for pulling in data, doing lightweight aggregations, and then sending the data off to a preset destination.

Every observability vendor has a custom agent.
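As an illustration of the "lightweight aggregations" an agent might perform, the sketch below collapses counter points that share a name and tag set into a single point before sending. The record shape and the `aggregate_counters` name are assumptions made for this example; real agents each have their own formats and flush intervals.

```python
from collections import defaultdict


def aggregate_counters(points):
    # Sum counter values that share the same metric name and tag set,
    # so one point per series is sent per flush instead of one per event.
    totals = defaultdict(float)
    for point in points:
        key = (point["name"], tuple(sorted(point.get("tags", {}).items())))
        totals[key] += point["value"]
    return [
        {"name": name, "tags": dict(tags), "value": value}
        for (name, tags), value in totals.items()
    ]


raw_points = [
    {"name": "http.requests", "tags": {"status": "200"}, "value": 1},
    {"name": "http.requests", "tags": {"status": "200"}, "value": 1},
    {"name": "http.requests", "tags": {"status": "500"}, "value": 1},
]
flushed = aggregate_counters(raw_points)
print(flushed)
```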

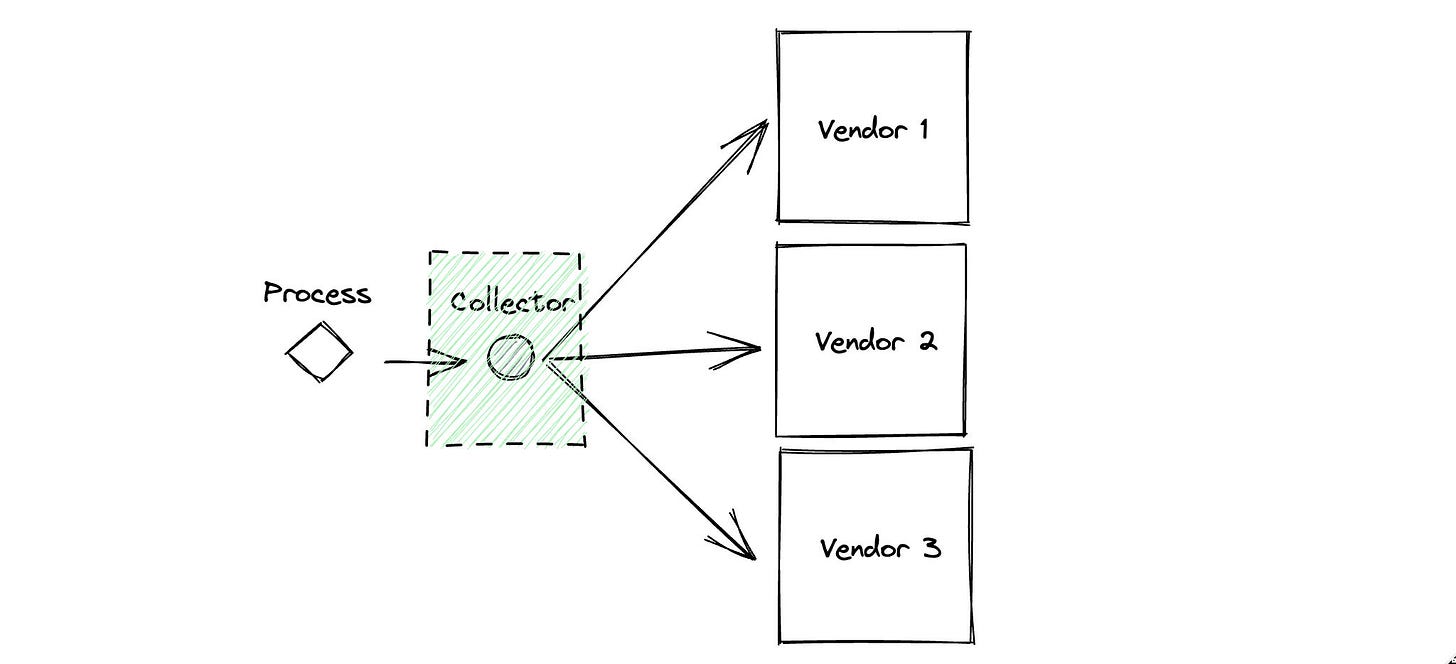

Collectors

A step up from the agent deployment is the collector deployment. Collectors can be deployed either on the same system that they are monitoring or as a standalone deployment. They aggregate observability data from various sources, can apply basic filters, and route data to one or more destinations.

FluentD and Fluent Bit are among the most popular collector options, with wide community adoption and an extensive set of integrations.

Pipelines

When a collector grows up, it becomes an observability pipeline. Observability pipelines can be deployed on the same system, as a standalone deployment, or as a cloud endpoint. Observability pipelines can receive data from any source, apply near-arbitrary transformations on the data when it is in flight, and export it to any destination.

Vector is a popular open-source pipeline solution written in Rust that has widespread adoption.

First Mile Landscape

There are many solutions for observability pipelines - we'll go over some of the most popular solutions in both the open source and commercial sides of this space.

Open Source Solutions

In terms of open source, popular solutions include Fluent Bit, Vector, and the OTEL Collector.

Fluent Bit

released: 2015

language: C

pros: performance, Lua-based transformations

cons: smaller ecosystem (compared to FluentD), harder to extend

Fluent Bit was released in 2015 as a lightweight version of FluentD. It has zero dependencies, is highly performant, and supports arbitrary transformations authored in the Lua programming language. It also comes built in on certain cloud platforms like AWS.

Vector

released: 2020

language: Rust

pros: performance, custom transformation language (VRL), acknowledgment and delivery guarantees

cons: smaller ecosystem, hard to write plugins

Vector was released in 2020 as a modern Rust-based observability pipeline. It achieves even higher throughput than Fluent Bit (albeit with higher CPU consumption) and is easy to install and integrate. Users can transform data using the Vector Remap Language (VRL) - a custom DSL designed for transforming data while maximizing flexibility, runtime safety, and performance.

OTEL Collector

released: 2020

language: Go

pros: performance, large ecosystem, industry standard, easy to extend

cons: mixed stability

The OTEL collector was released in 2020 as part of the OpenTelemetry (OTEL) project. OTEL is a CNCF initiative to provide a vendor-neutral standard for cloud-native observability. The OTEL collector can be deployed and used as an agent, collector, or pipeline depending on how it's configured.

The OTEL collector is available in many different flavors. The core collector provides a stable set of built-in receivers, processors, and exporters for handling OTEL-formatted data. The OTEL Contrib Distro contains the kitchen sink of integrations into every vendor and observability service under the sun. Vendors like Splunk and AWS also offer their own OTEL Distros that are curated for their particular platforms.

Users can transform data using built-in processors or the OpenTelemetry Transformation Language (OTTL). This is a SQL-like declarative DSL optimized for transforming observability data. Note that OTTL is under active development.

Commercial Solutions

There are also multiple commercial offerings available in the observability pipeline space. Popular vendors include Cribl, Calyptia, Datadog, and Mezmo.

Cribl

founded: 2017

Cribl was started by a group of ex-Splunk folks. Their original claim to fame was saving money on your Splunk bill. Cribl is known as the first major commercial offering in the observability pipeline space. They are an easy choice for enterprises and companies that are heavily reliant on Splunk.

Calyptia

founded: 2020

Calyptia was founded by the authors of FluentD and Fluent Bit. They provide an observability pipeline solution built on top of said products. They are a good choice if you already use these technologies or operate on platforms that integrate natively with them.

Datadog

founded: 2010, acquired Vector in 2021

Datadog has dominated the modern observability landscape by positioning itself as the single pane of (expensive) glass for all observability needs. They acquired the company behind Vector in 2021 and have built a managed observability pipelines solution around it. They are a good choice if you're already using Vector and want native support for Datadog.

Mezmo (formerly LogDNA)

founded: 2015, rebranded to Mezmo in 2022

Mezmo started as LogDNA with an exclusive focus on providing a logging pipeline. It has since expanded its scope and rebranded itself as a general observability pipeline solution. Mezmo is easy to get started with and has a slick UI for adding filters and transformations. They have a proprietary agent for their logging pipeline, but their observability pipeline can ingest data from a variety of sources, including FluentD, Fluent Bit, and OTEL.

Nimbus

founded: 2023

Nimbus was started in 2023 by the author. It is a fully managed observability pipeline that helps organizations reduce data volume by 60% without losing signal. Nimbus analyzes your traffic and automatically recommends optimizations that reduce and aggregate data.

Choosing the Right Solution

There are many options for first-mile observability. The easiest and most common choice is to do nothing and use whatever agent(s) come bundled with your vendor/solution of choice.

If that no longer suffices, here are some considerations for choosing an observability pipeline:

For something performant that just works, consider Vector. Use Datadog for a managed version.

For integrating with the widest set of existing tools, consider FluentD and Fluent Bit. Use Calyptia for a managed version.

For enterprise and Splunk buildouts, consider Cribl. There is no open-source equivalent, though Cribl does offer a free tier.

For a good mix of everything as well as future-proofing with OpenTelemetry, use the OTEL collector.

Final Thoughts

In 2023, not only is observability data growing at a dizzying rate, but so is the number of vendors that help you manage it. Whereas the "backend" space has a vast selection of options for both incumbents and newcomers, the "front end" space did not develop commercially until 2017 with the launch of Cribl. Six years later, observability pipelines are only now starting to gain traction. I'm personally excited by the potential of OTEL - its CNCF status, standards-based implementation, and transformation language position it to be a leader in this space.