A Deep Dive into Observability Pricing

Everything You Never Wanted to Know About Logging Costs

There are certain things in life that I hate doing. Taxes. Getting my teeth pulled. Calculating observability costs.

This post focuses on the last item: calculating observability costs. Specifically, we'll look at the usage-based pricing schemes of commercial observability vendors. Since observability spans such a wide array of services, we'll limit the scope of this post to logging for the sake of brevity. That said, the models we'll discuss for logging broadly apply to metrics and traces as well.

Pricing Models

When it comes to logs, there are two dominant pricing models employed by the industry:

Volume-based pricing: you are charged in proportion to the total amount of data ingested, usually measured in gigabytes/month.

Resource-based pricing: you are charged in proportion to the resources it takes to process your data, usually measured in compute consumption.

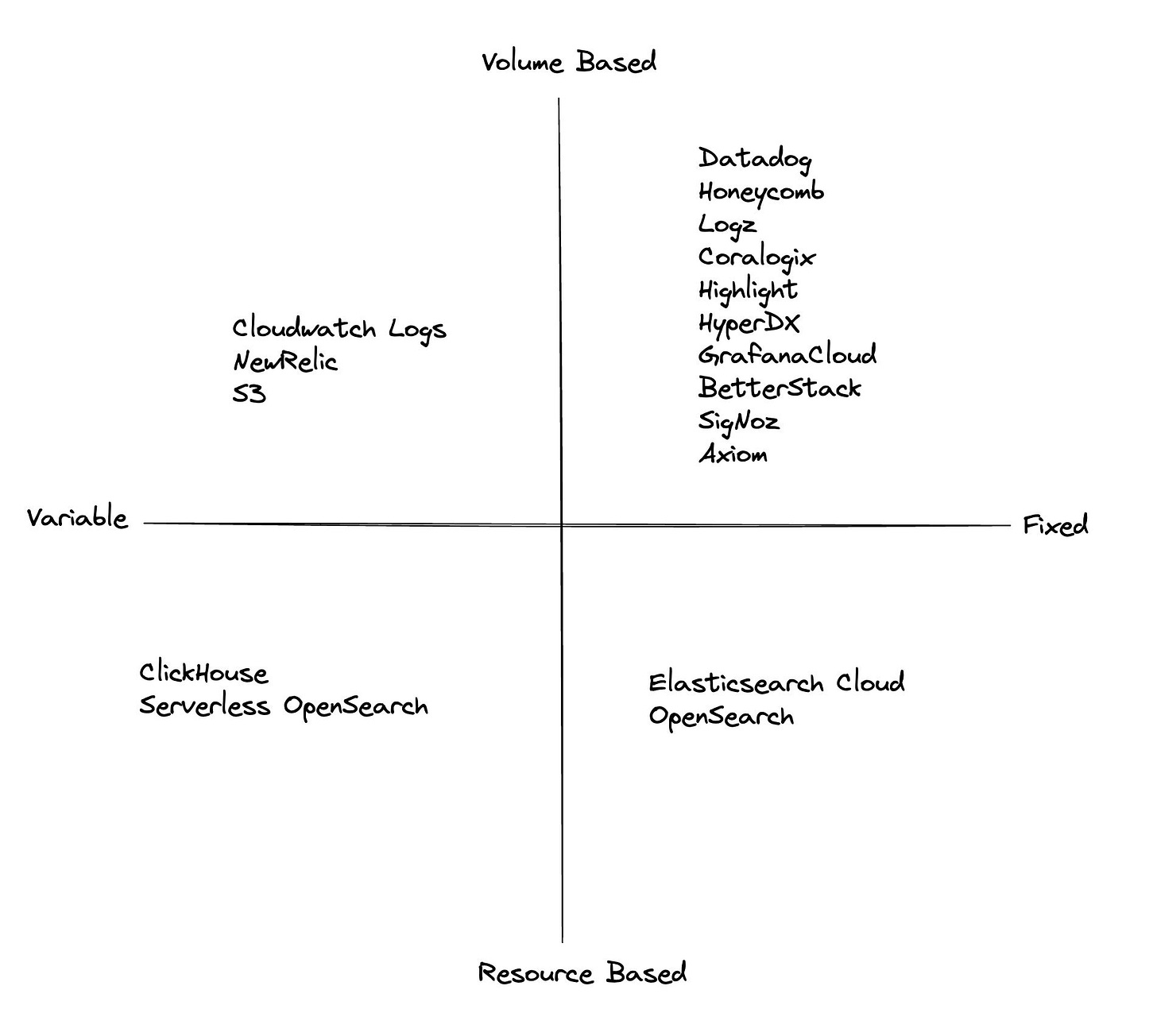

These two pricing models can be further split into fixed cost usage and variable cost usage.

Fixed cost usage: you are charged a higher per-unit fee upfront but pay no consumption-based fee afterwards.

Variable cost usage: you are charged a lower per-unit fee upfront but pay a variable amount based on consumption.

Altogether, you can plot vendors on the following two-by-two grid:

Volume Based Pricing

In volume-based pricing, you are charged per gigabyte of logs (or some gigabyte equivalent). Logs are metered at the following stages:

Ingest: This covers the ingestion of the logs as they are sent from your service to the vendor

Storage: This covers the storing and indexing of logs as they are processed by the vendor

Query: This covers executing search queries by users over the stored logs

Ingest

The ingest stage is generally the cheapest stage of the log processing pipeline. This is because vendors want you to send them more data, as more data leads to lock-in. Ingest to Amazon S3 is free. Ingest to Datadog is $0.10/gb.

Many vendors combine ingest and storage. For example, ingest and storage in New Relic start at $0.30/gb.

Note that there are exceptions to the rule of cheap ingest. Amazon CloudWatch Logs charges $0.50/gb for ingest and $0.03/gb/month for storage.
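Using only the two CloudWatch Logs rates quoted above, a minimal Python sketch shows how lopsided the split between ingest and storage is. It assumes 1TB is taken as 1,000 gb and that one month's worth of data is held in storage; rates change over time, so treat the constants as placeholders to check against current AWS pricing.

```python
# Rates quoted above for Amazon CloudWatch Logs; verify against current AWS pricing.
INGEST_PER_GB = 0.50          # $ per gb ingested
STORAGE_PER_GB_MONTH = 0.03   # $ per gb held in storage per month


def cloudwatch_monthly_cost(ingested_gb: float, stored_gb: float) -> dict:
    """Split one month's CloudWatch Logs bill into ingest and storage charges."""
    ingest = ingested_gb * INGEST_PER_GB
    storage = stored_gb * STORAGE_PER_GB_MONTH
    return {"ingest": ingest, "storage": storage, "total": ingest + storage}


# Assumed scenario: 1TB (taken as 1,000 gb) ingested, one month of data stored.
bill = cloudwatch_monthly_cost(ingested_gb=1_000, stored_gb=1_000)
print(bill)
print(f"ingest is {bill['ingest'] / bill['storage']:.1f}X the storage charge")
```

Storage accumulates month over month with longer retention, so the ratio narrows over time, but ingest still dominates for short retention windows.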

Storage

The storage stage is generally where vendors make the bulk of their margin. Storage is typically measured in gb/month or per million events.

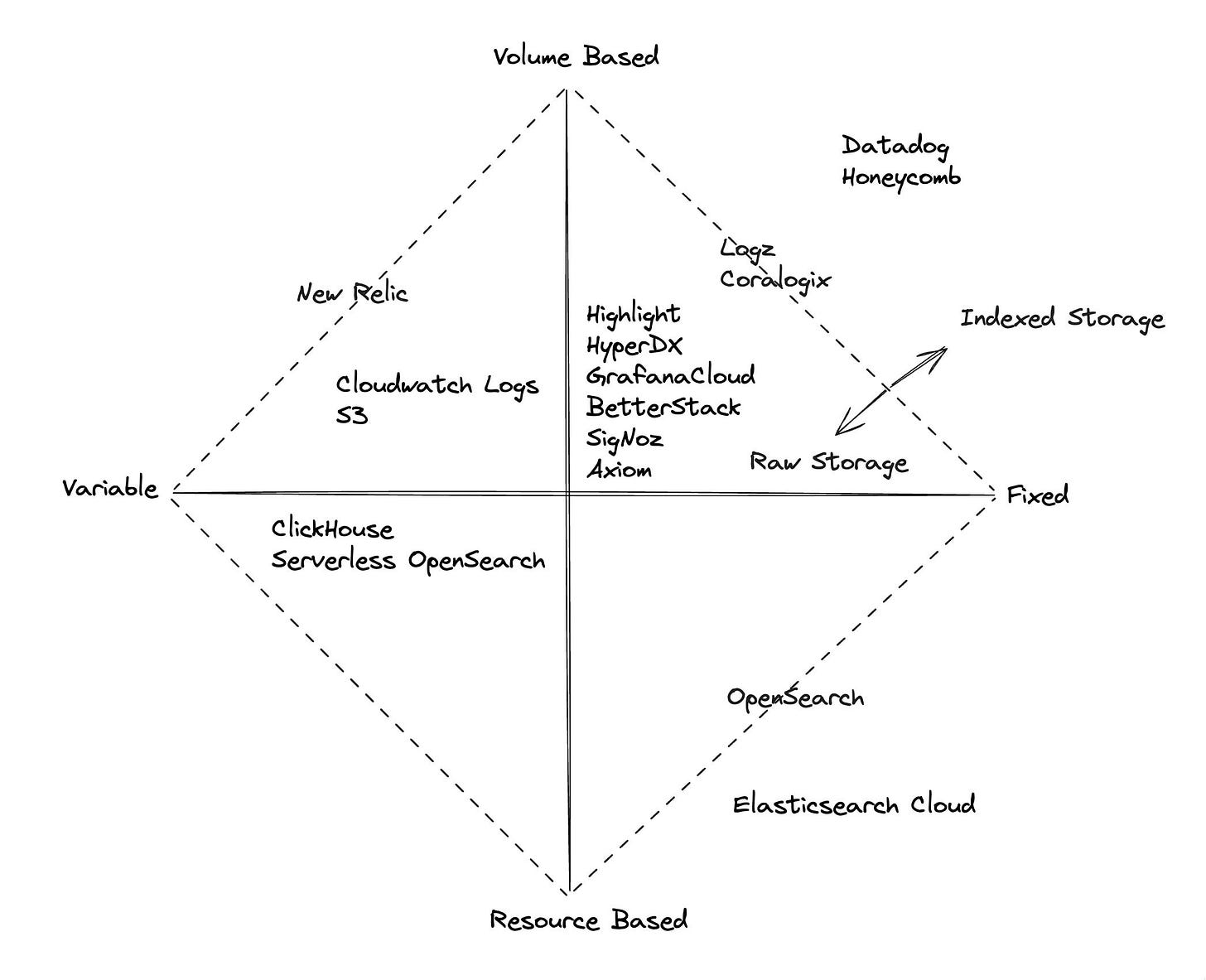

When storing log data, there is a difference between what I refer to as raw storage and indexed storage.

Raw storage is cheaper than indexed storage by an order of magnitude but usually involves variable usage costs at query time. Indexed storage is more expensive but typically has no variable cost at query time.

Many vendors now also offer different storage tiers where you can pick what portion of your data is indexed.
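Tiered storage can be thought of as a blend of the two. The sketch below is a simplified model, not any vendor's formula: the indexed rate, raw rate, and per-gb scan rate are hypothetical, indexed data is assumed to carry no query fee, and only queries against the raw portion are charged per gb scanned.

```python
def tiered_storage_cost(total_gb: float, indexed_fraction: float,
                        indexed_rate: float, raw_rate: float,
                        raw_gb_scanned: float, scan_rate: float) -> float:
    """Monthly cost when only part of the data is indexed.

    Indexed data is assumed to carry no query fee; queries against the raw
    portion are charged per gb scanned.
    """
    if not 0.0 <= indexed_fraction <= 1.0:
        raise ValueError("indexed_fraction must be between 0 and 1")
    indexed_gb = total_gb * indexed_fraction
    raw_gb = total_gb - indexed_gb
    storage = indexed_gb * indexed_rate + raw_gb * raw_rate
    return storage + raw_gb_scanned * scan_rate


# Hypothetical rates: $2.00/gb indexed, $0.10/gb raw, $0.005/gb scanned.
# Index 20% of 1,000 gb and scan 5,000 gb of the raw tier per month.
print(tiered_storage_cost(1_000, 0.2, 2.00, 0.10, 5_000, 0.005))
```

Raising `indexed_fraction` moves cost from query time to storage time, which is the lever these tiered offerings expose.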

Below is an updated grid with vendors divided by storage tier. Vendors straddling the dotted line offer multiple storage tiers or have per-seat pricing.

Query

The query stage is generally the hardest to calculate. Vendors like Datadog that offer indexed storage bake the query cost into their storage fee, which means there is no additional query fee.

Vendors that offer raw storage charge a variable cost for searching over the data, usually per gb scanned. This isn't an arbitrary charge: raw storage is cheap precisely because it is not indexed, so extra compute has to be spent at query time to scan the data.
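One way to see the raw vs indexed tradeoff is to ask how many times per month you could scan your entire dataset before raw storage costs as much as indexed storage. A minimal sketch, with hypothetical rates chosen only for illustration:

```python
def full_scans_to_break_even(indexed_rate: float, raw_rate: float,
                             scan_rate: float) -> float:
    """Full-dataset scans per month at which raw storage (cheap to store,
    pay per gb scanned) costs as much as indexed storage (expensive to
    store, no query fee). All rates are $/gb; data volume cancels out."""
    return (indexed_rate - raw_rate) / scan_rate


def cheaper_option(stored_gb: float, scanned_gb: float, indexed_rate: float,
                   raw_rate: float, scan_rate: float) -> str:
    """Compare one month of raw storage plus scans against indexed storage."""
    raw_cost = stored_gb * raw_rate + scanned_gb * scan_rate
    indexed_cost = stored_gb * indexed_rate
    return "raw" if raw_cost < indexed_cost else "indexed"


# Hypothetical rates: $2.00/gb indexed, $0.10/gb raw, $0.005/gb scanned.
print(full_scans_to_break_even(2.00, 0.10, 0.005))
print(cheaper_option(1_000, 100_000, 2.00, 0.10, 0.005))
print(cheaper_option(1_000, 1_000_000, 2.00, 0.10, 0.005))
```

Under these made-up rates the break-even is about 380 full scans a month; query-heavy teams cross it, light users never do.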

Resource Based Pricing

In resource based pricing, you are charged for the underlying processing you consume when working with logs. This is usually measured in terms of raw instances or some abstract compute unit.

In fixed cost usage, you pay for the underlying instances responsible for ingest, storage, and query. This means you need to determine and allocate enough compute to satisfy your performance, availability, and durability requirements. This involves more work upfront but, if properly provisioned, is usually cheaper than a volume-based pricing model. That's because a vendor charging by volume needs to assume the worst case (100% utilization with maximum availability and durability) while maintaining a margin, and few workloads actually consume that much in practice.
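The phrase "if properly provisioned" carries a lot of weight. A minimal sketch of the effective per-gb cost of self-provisioned capacity shows why; the cluster cost and capacity below are hypothetical numbers, not drawn from any vendor.

```python
def effective_cost_per_gb(monthly_instance_cost: float,
                          capacity_gb_per_month: float,
                          utilization: float) -> float:
    """Effective $/gb for self-provisioned capacity.

    capacity_gb_per_month: log volume the cluster can handle at 100% utilization.
    utilization: fraction of that capacity actually used, in (0, 1].
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return monthly_instance_cost / (capacity_gb_per_month * utilization)


# Hypothetical cluster: $3,000/month of instances sized for 10,000 gb/month.
for utilization in (1.0, 0.5, 0.25):
    cost = effective_cost_per_gb(3_000, 10_000, utilization)
    print(f"{utilization:.0%} utilized: ${cost:.2f}/gb")
```

Every halving of utilization doubles the effective price, so over-provisioning can quietly erase the advantage over volume-based pricing.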

In variable cost usage, you pay for the compute you actually consume. For example, Amazon OpenSearch Serverless charges customers based on OCUs (OpenSearch Compute Units):

One OCU comprises 6 GB of RAM, corresponding vCPU, GP3 storage (used to provide fast access to the most frequently accessed data), and data transfer to Amazon Simple Storage Service (S3)

AWS autoscales your compute depending on your usage. You no longer need to allocate resources manually, but you pay a variable premium on compute in exchange.
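Under this model the compute bill is roughly the OCU-hours consumed times an hourly rate. The sketch below ignores everything except compute, takes a list of hourly OCU counts as if produced by autoscaling, and uses a placeholder rate rather than AWS's actual price.

```python
from typing import Sequence


def ocu_bill(hourly_ocus: Sequence[float], rate_per_ocu_hour: float) -> float:
    """Bill = total OCU-hours consumed x hourly rate per OCU."""
    return sum(hourly_ocus) * rate_per_ocu_hour


# Hypothetical day: 4 OCUs for 16 quiet hours, autoscaled to 10 OCUs for 8 busy hours.
day = [4] * 16 + [10] * 8
print(ocu_bill(day, rate_per_ocu_hour=0.25))  # rate is a placeholder, not AWS's price
```

The busy hours account for more than half of the OCU-hours here, which is the sense in which you pay a variable premium: spikes are billed as they happen.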

Price Modifiers

In addition to volume vs resource and fixed usage vs variable usage, there are specific options you can buy into that multiply your costs across all pricing models.

First, there's the annual plan vs the monthly plan. Annual plans lock you into a certain level of usage for a year but can be 30% cheaper than the monthly plan. While a 30% discount seems great, using an annual plan well requires accurately forecasting your log usage across all the factors we've discussed so far. I have yet to see a company at any scale do this effectively.

There are also enterprise discounts at higher volumes. These discounts can be significant but, like the annual plan, require hefty upfront commitments.

Second, there's retention: the number of days a vendor will keep your logs indexed. Retention can range from a single day to indefinitely. The longer the retention, the more it costs to store the logs.

Finally, there are "value add" options that vendors provide, like sensitive data scanning and extra compliance guarantees. These options can double the price per gigabyte during ingestion and storage.
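The forecasting problem with annual plans can be sketched in a few lines. This assumes a hypothetical policy where the commitment is paid in full at a 30% discount and any usage above it is billed at the undiscounted monthly rate; real overage terms vary by vendor.

```python
def annual_plan_cost(committed_gb: float, actual_gb: float,
                     monthly_rate: float, discount: float = 0.30) -> float:
    """Yearly cost on an annual plan under a hypothetical overage policy:
    the full commitment is paid at the discounted rate, and usage above it
    is billed at the undiscounted monthly rate."""
    committed = committed_gb * monthly_rate * (1 - discount)
    overage = max(0.0, actual_gb - committed_gb) * monthly_rate
    return committed + overage


def monthly_plan_cost(actual_gb: float, monthly_rate: float) -> float:
    """Pay-as-you-go: every gb at the undiscounted rate."""
    return actual_gb * monthly_rate


# Hypothetical $1.00/gb rate with 12,000 gb actually used over the year.
for committed in (6_000, 12_000, 24_000):
    print(committed, annual_plan_cost(committed, 12_000, 1.00),
          monthly_plan_cost(12_000, 1.00))
```

With these numbers, a perfect forecast saves 30%, under-committing by half still saves 15%, but over-committing by 2X costs 40% more than staying on the monthly plan.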
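To see why retention matters, note that with constant ingest, the volume held levels off at daily ingest times retention days. The sketch assumes storage is billed per gb held per month; many indexed-storage vendors instead price retention as tiers on ingested volume, so treat this as an illustration of the scaling rather than any vendor's formula. The ingest volume and rate are hypothetical.

```python
def steady_state_stored_gb(daily_ingest_gb: float, retention_days: int) -> float:
    """With constant ingest, data older than the retention window is dropped,
    so the volume held levels off at daily ingest x retention days."""
    return daily_ingest_gb * retention_days


def monthly_retention_cost(daily_ingest_gb: float, retention_days: int,
                           rate_per_gb_month: float) -> float:
    """Assumes storage is billed per gb held per month (hypothetical model)."""
    return steady_state_stored_gb(daily_ingest_gb, retention_days) * rate_per_gb_month


# Hypothetical: 100 gb/day at $0.10/gb/month.
for days in (1, 14, 30, 90):
    print(days, monthly_retention_cost(100, days, 0.10))
```

Under this model, cost grows linearly with retention: going from 14 to 90 days multiplies the storage bill by more than 6X.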

Pricing Comparison

At this point, you might have an inkling of why observability pricing is hard to calculate, never mind compare. No two vendors have the same pricing model, and each charges for a different permutation of usage.

Nevertheless, we're going to make a best-effort attempt, using a number of assumptions to normalize costs across vendors.

Volume Based Fixed Cost Comparison

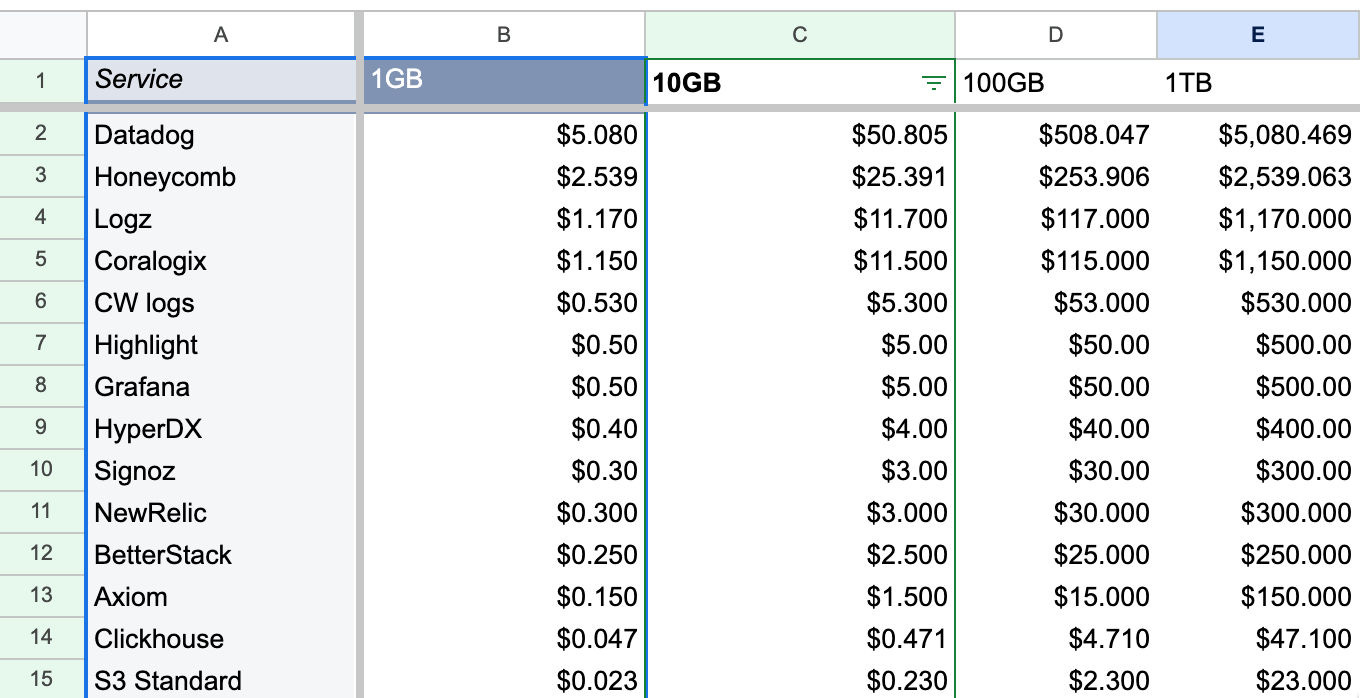

The following is a comparison of just the volume-based pricing of different vendors in terms of cost per gigabyte per month. It does not cover vendors that use resource-based pricing and does not take into account the variable portion of the cost.

We make the following assumptions when calculating costs:

for vendors that have a fixed price component ($X for the first Y GB and then $Z/gb/month afterwards), we ignore it, as it is an insignificant part of the cost at scale

for vendors that have event-based pricing ($X per million events), we assume the average event is 512 bytes and normalize to gb/month

for vendors that have indexed storage with variable retention prices, we normalize to 14-day retention

for vendors that have multiple tiers of storage (e.g. hot and cold), we compare prices for "hot" storage
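The event-based normalization can be sketched as below. The post does not say whether a gb is 10^9 or 2^30 bytes; the sketch assumes decimal gigabytes, and the $1.00 price is hypothetical.

```python
BYTES_PER_GB = 10**9     # assumption: decimal gigabytes (use 2**30 for GiB)
AVG_EVENT_BYTES = 512    # average event size assumed in the post


def per_million_events_to_per_gb(price_per_million: float,
                                 avg_event_bytes: int = AVG_EVENT_BYTES) -> float:
    """Convert a $X-per-million-events price to $/gb."""
    events_per_gb = BYTES_PER_GB / avg_event_bytes   # 1,953,125 events at 512 bytes
    return price_per_million * events_per_gb / 1_000_000


# A hypothetical $1.00 per million events works out to roughly $1.95/gb.
print(per_million_events_to_per_gb(1.00))
```

The result is sensitive to the event-size assumption: halving the average event to 256 bytes doubles the effective per-gb price.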

Some observations:

indexed storage vendors have the highest price per byte and raw storage vendors have the lowest.

there is a more than 33X difference between the most expensive vendor (Datadog at $5.08/gb/mo) and the least expensive (Axiom at $0.15/gb/mo)

the new generation of vendors that started within the last 10 years (e.g. Axiom, SigNoz, HyperDX) have drastically lower volume-based prices ($0.50/gb/mo or lower)

Volume Based Variable Cost Comparison

Only looking at fixed cost can paint a distorted picture of the vendor landscape - variable costs can easily exceed the fixed cost depending on the workload.

We make the following assumptions when calculating costs:

variable usage per month is total team size * volume of data ingested * 10 (this assumes that each team member, on average, queries 10X the monthly ingest volume each month)

S3 query cost is modeled as the cost of scanning data using Athena ($0.005/gb scanned)

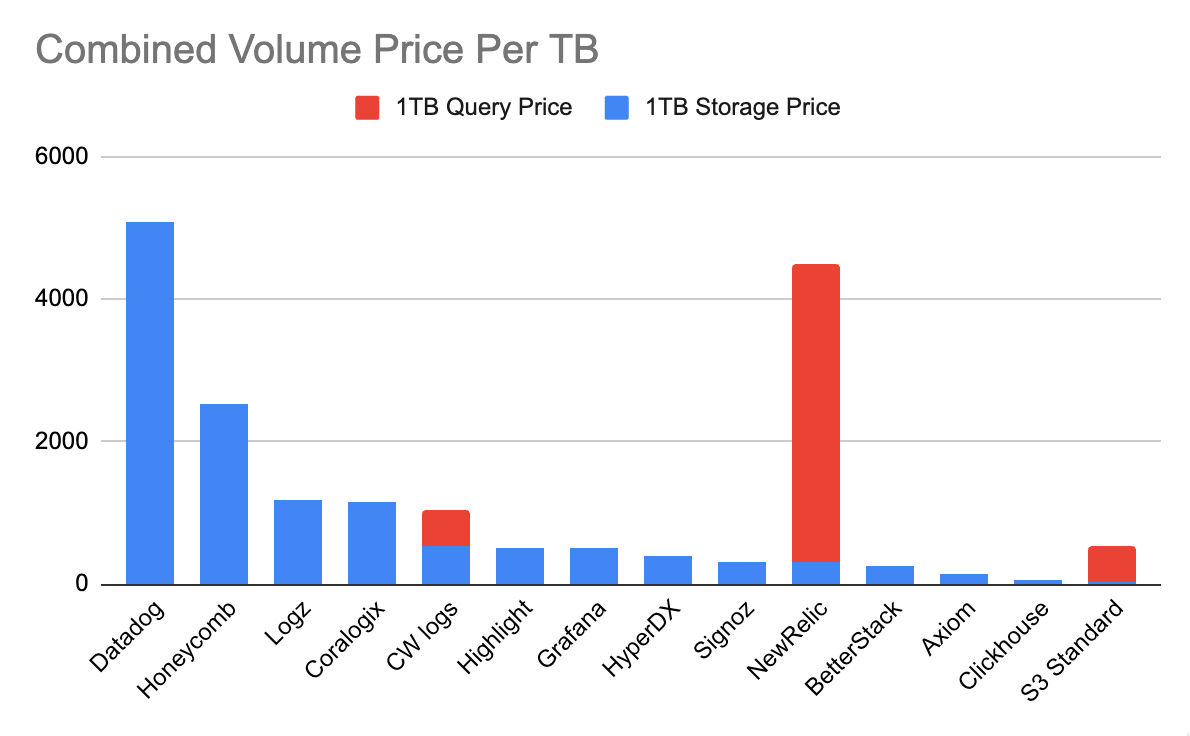

The following is a chart of vendors that have a variable cost during query time. We model a team size of 10 with a monthly data volume of 1TB.
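The variable usage assumptions above reduce to a couple of lines of arithmetic. A minimal sketch, taking 1TB as 1,000 gb:

```python
# Variable usage model from the assumptions above.
QUERY_MULTIPLIER = 10          # each team member scans 10X the monthly ingest volume
ATHENA_PER_GB_SCANNED = 0.005  # $ per gb scanned, used to model S3 queries


def monthly_gb_scanned(team_size: int, ingest_gb: float) -> float:
    """total team size * volume of data ingested * 10"""
    return team_size * ingest_gb * QUERY_MULTIPLIER


def s3_query_cost(team_size: int, ingest_gb: float) -> float:
    """Cost of scanning that volume with Athena."""
    return monthly_gb_scanned(team_size, ingest_gb) * ATHENA_PER_GB_SCANNED


# Modeled scenario: team of 10, 1TB/month (taken as 1,000 gb).
print(monthly_gb_scanned(10, 1_000))
print(s3_query_cost(10, 1_000))
```

Because the formula multiplies team size by volume, doubling both the team and the ingest volume quadruples the scan bill.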

Some observations:

CloudWatch Logs cost doubles, as the variable cost roughly equals the volume cost

Grafana Cloud has a fair use policy that makes it free to query up to 100X the monthly volume, which is why you don't see a variable cost component for Grafana with 10 people. Note that once this is exceeded, Grafana becomes extremely expensive at query time ($0.40/gb scanned)

New Relic charges per seat: at $418.80 per full platform user, the total price increases by over 13X

for any logging service built on top of S3, query cost becomes the bulk of the total
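The Grafana and New Relic observations can be sketched with the figures quoted above. Two assumptions are ours: the 100X fair use allowance is applied to the whole team's scans, and overage is billed per gb beyond it. The New Relic function ignores any free data allowance or discounts, so its ratio will not match the chart exactly.

```python
QUERY_MULTIPLIER = 10  # each member scans 10X monthly ingest (assumption above)


def grafana_overage(team_size: int, ingest_gb: float,
                    free_multiple: float = 100,
                    overage_per_gb: float = 0.40) -> float:
    """Query charge once team scans exceed the fair use allowance
    (assumed: 100X monthly volume, shared across the team)."""
    scanned = team_size * ingest_gb * QUERY_MULTIPLIER
    allowance = ingest_gb * free_multiple
    return max(0.0, scanned - allowance) * overage_per_gb


def new_relic_monthly(ingest_gb: float, users: int,
                      per_gb: float = 0.30, per_user: float = 418.80) -> float:
    """Volume charge plus per-seat charge for full platform users."""
    return ingest_gb * per_gb + users * per_user


for team in (10, 11, 20):
    print(team, grafana_overage(team, 1_000))

ratio = new_relic_monthly(1_000, 10) / new_relic_monthly(1_000, 0)
print(f"New Relic seats multiply the bill by {ratio:.1f}X")
```

The Grafana numbers show the cliff: a team of 10 sits exactly at the allowance, while adding one person adds thousands of dollars a month.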

Volume Based Combined Cost Comparison

The following two charts attempt to show the total cost of ownership, accounting for both the fixed and variable portions of volume-based pricing, when processing 1TB of logs per month for a team of 10.

Some observations:

New Relic has the biggest jump in pricing due to its per-seat model

CloudWatch Logs and S3 become drastically more expensive when querying is taken into account
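A rough reconstruction of the combined cost for CloudWatch Logs and S3 follows. Two rates are assumptions not stated in the post: $0.005/gb scanned for CloudWatch Logs Insights queries and $0.023/gb/month for S3 storage. Both should be checked against current AWS pricing.

```python
# Combined (fixed + variable) monthly cost for the modeled scenario.
INGEST_GB = 1_000        # 1TB per month, taken as 1,000 gb
TEAM_SIZE = 10
QUERY_MULTIPLIER = 10    # each member scans 10X monthly ingest

SCANNED_GB = TEAM_SIZE * INGEST_GB * QUERY_MULTIPLIER


def total_cost(fixed: float, scanned_gb: float, scan_rate: float) -> float:
    """Fixed (ingest + storage) cost plus per-gb query cost."""
    return fixed + scanned_gb * scan_rate


# CloudWatch Logs: $0.50/gb ingest + $0.03/gb/month storage (from the post);
# $0.005/gb scanned for Logs Insights queries is an assumption.
cloudwatch_fixed = INGEST_GB * 0.50 + INGEST_GB * 0.03
cloudwatch_total = total_cost(cloudwatch_fixed, SCANNED_GB, 0.005)

# S3: free ingest (from the post); $0.023/gb/month storage is an assumption;
# queries priced at the Athena rate used above ($0.005/gb scanned).
s3_fixed = INGEST_GB * 0.023
s3_total = total_cost(s3_fixed, SCANNED_GB, 0.005)

for name, fixed, total in (("CloudWatch Logs", cloudwatch_fixed, cloudwatch_total),
                           ("S3 + Athena", s3_fixed, s3_total)):
    print(f"{name}: ${fixed:,.2f} fixed -> ${total:,.2f} total ({total / fixed:.1f}X)")
```

CloudWatch roughly doubles, while S3's near-zero storage cost is swamped by scan charges, which is why S3 shows the larger relative jump.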

Note that there are many "raw storage" vendors that do not (currently) charge a variable cost for querying (e.g. Highlight, HyperDX, SigNoz, BetterStack). These vendors are all built on top of either S3 (or a cloud vendor equivalent) or ClickHouse (which can also store data on S3).

This is most likely due to a combination of the following:

our estimate of queries scaling at 10X monthly ingest volume per user is far too conservative

the naive assumption of using S3 + Athena to model S3 query is far too conservative

the aforementioned vendors are able to leverage caching, pre-computation, and other optimizations to drastically reduce the cost of serving queries

the aforementioned vendors are all "new" to the scene (started within the last 5 years) and don't yet have customers whose scale and workload would break their current pricing model

Conclusion

Pricing is tricky. Usage-based pricing means you pay little when you're small, but your costs can grow exponentially as you scale. This makes them hard to compare or forecast.

If you're just starting out, it probably doesn't matter, because costs are minimal and you'll get more leverage by focusing on your business. If you're a growing or mature organization and observability is over 20% of your infrastructure budget or past the seven-figure range, it might be worthwhile to look into optimizations (e.g. see Datadog's $65M/year customer).

The kicker is that the time you want to optimize is also when you're most locked in. Existing instrumentation, training, runbooks, dashboards, alarms: all of this and more needs to be migrated at scale without breaking existing services or slowing down product velocity. Switching might mean dedicating a two-pizza team for an entire year to deliver an outcome that, in all likelihood, will be worse than what you have in place today. This is why it's worth understanding not just what your costs are today but how they will scale as you grow.

If you're already in too deep or just want someone to make the existing costs go away, check out Nimbus (disclaimer: I'm the founder). The Nimbus platform helps companies reduce logging costs by 60% or more with no migrations or code changes. Our optimization engine automatically analyzes incoming logs (metrics and traces available in limited preview) to identify common patterns and create transformations that reduce log volumes without dropping data. If controlling observability costs is a concern, feel free to reach out directly to kevin(at)nimbus.dev
